Duplicate content is a problem for many websites, and most webmasters don’t realise they are doing anything wrong. Search engines want to provide relevant results for their users; it’s how Google became successful. If a search engine were to return five identical pages on the same page of results, that would not be useful to the searcher. Many search engines therefore have filters in place to remove duplicate listings. This keeps their results clean and is, overall, a good feature. From a webmaster’s point of view, however, you don’t know which copy of the content the search engine is hiding, and it can put a real damper on your marketing efforts if the search engines won’t show the copy you are trying to promote.

A common request is to remove “index.php” from the URL or redirect it away. This has to be done on the server side, for example with Apache’s mod_rewrite module in an “.htaccess” file or in the main server configuration.

Duplicate content occurs when the search engine finds identical content at different URLs, such as:

www and non-www

http://www.iwebdev.it and http://iwebdev.it

In most cases both return the same page; in other words, your entire site is duplicated.

root and index

http://www.iwebdev.it (root) and http://www.iwebdev.it/index.php

Most people’s homepages are available by typing either URL – duplicate content.

Session IDs

http://www.iwebdev.it/project.php?PHPSESSID=24FD6437ACE578FEA5745

This problem affects many dynamic sites, including PHP, ASP and ColdFusion sites, and many forums are poorly indexed because of it. A new session ID is generated every time a visitor comes to your site, so every time the search engine indexes your site, it gets the same content at a different URL. Amazingly, most search engines aren’t clever enough to detect and fix this, so it’s up to you as a webmaster.
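To see why these URLs count as duplicates, it helps to strip the session ID and compare what is left. The Python sketch below does that; `strip_session_id` and `SESSION_PARAMS` are hypothetical names for illustration, and the sketch assumes the session ID travels as an ordinary query parameter, as PHPSESSID does.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Query parameters treated as session IDs. PHPSESSID is PHP's default name;
# other platforms use different names, so extend this set as needed (assumption).
SESSION_PARAMS = {"phpsessid"}


def strip_session_id(url: str) -> str:
    """Return the URL with any session-ID query parameters removed."""
    parts = urlsplit(url)
    # keep_blank_values=True keeps parameters such as "?q=" instead of dropping them.
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in SESSION_PARAMS
    ]
    return urlunsplit(parts._replace(query=urlencode(kept)))


if __name__ == "__main__":
    # prints http://www.iwebdev.it/project.php
    print(strip_session_id(
        "http://www.iwebdev.it/project.php?PHPSESSID=24FD6437ACE578FEA5745"
    ))
```

Two crawls with different session IDs reduce to the same URL once the parameter is removed, which is exactly the duplicate the search engine ends up indexing.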

One page, multiple URLs

http://www.iwebdev.it/project.php?category=web&product=design and http://www.iwebdev.it/project.php?category=software&product=design

A product may be allocated to more than one category – in this case the “product detail” page is identical, but it’s available via both URLs.
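Search engines do not publish exactly how their duplicate filters work, and real filters also catch near-duplicates. As a simplified sketch for auditing your own site, the Python below groups URLs whose HTML is identical once whitespace is collapsed; the `pages` mapping is assumed to come from your own crawl, and `fingerprint` and `find_duplicates` are hypothetical helpers.

```python
import hashlib
import re
from collections import defaultdict


def fingerprint(html: str) -> str:
    """Hash the page after collapsing whitespace, so formatting-only changes match."""
    normalised = re.sub(r"\s+", " ", html).strip()
    return hashlib.sha256(normalised.encode("utf-8")).hexdigest()


def find_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """Return groups of URLs (sorted) that serve identical content."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url, html in pages.items():
        groups[fingerprint(html)].append(url)
    # Only groups with more than one URL are duplicates.
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


if __name__ == "__main__":
    crawl = {
        "http://www.iwebdev.it/project.php?category=web&product=design": "<p>Design</p>",
        "http://www.iwebdev.it/project.php?category=software&product=design": "<p>Design</p>\n",
        "http://www.iwebdev.it/": "<p>Home</p>",
    }
    for group in find_duplicates(crawl):
        print(group)
```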

Removing Duplicate Content

Having duplicate content on your site can make marketing significantly more difficult, especially when you are marketing the non-www version and Google is only showing the www version. Because you can’t tell the search engines which is the “original” copy, you must prevent duplicate content from occurring on your site.

www and non-www

I prefer to use the www version of my domain (for no particular reason; it just seems to look better on paper). If you are using Apache as your web server, you can include the following lines in your .htaccess file (changing the values to your own, of course):

RewriteEngine On

RewriteCond %{HTTP_HOST} ^iwebdev\.it$ [NC]

RewriteRule ^(.*)$ http://www.iwebdev.it/$1 [R=301,L]

If your webhost does not let you edit the .htaccess file, I would consider finding a new host. When it comes to removing duplicate content and producing search engine friendly URLs, Apache’s .htaccess is too good to ignore. If your website is hosted on Microsoft IIS, I recommend ISAPI Rewrite instead.
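The rewrite rule boils down to a simple decision: if the request arrived on the bare domain, answer with a 301 pointing at the same path on the www host. The Python function below restates that logic for illustration only; it is not how Apache evaluates the rule, and `www_redirect`, `BARE_HOST` and `CANONICAL_HOST` are hypothetical names you would change to your own domain.

```python
from typing import Optional

BARE_HOST = "iwebdev.it"           # host to redirect away from
CANONICAL_HOST = "www.iwebdev.it"  # host to redirect to


def www_redirect(host: str, path: str) -> Optional[str]:
    """Return the 301 target for a bare-domain request, or None to serve it as-is."""
    # Host names are case-insensitive, and the Host header may include a port.
    hostname = host.split(":", 1)[0].lower()
    if hostname == BARE_HOST:
        return f"http://{CANONICAL_HOST}{path}"
    return None


if __name__ == "__main__":
    print(www_redirect("iwebdev.it", "/project.php"))
    print(www_redirect("www.iwebdev.it", "/project.php"))
```

Requests that already use the www host fall through untouched, so the redirect cannot loop.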

Remove all references to “index.php”

Your homepage should never be referred to as index.htm, index.php, index.asp and so on. When you build incoming links, you will nearly always get links to www.iwebdev.it, so your internal links should point there too. One of my sites had a different PageRank on “/” (root) and “index.php” because the internal links pointed to index.php, which created duplicate content. Why go to the trouble of promoting two “different” pages at half strength when you can promote a single URL at full strength? After you have removed all references to index.php, you should set up a 301 redirect (below) to send index.php or index.htm to / (root).
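Finding every internal link to index.php by hand is tedious on a larger site. One way to audit a page is sketched below with Python’s standard `html.parser`; the `INDEX_FILES` set and the `find_index_links` helper are assumptions for illustration, and you would run it over the HTML of each page on your site.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit

# Default document names that should not appear in internal links (assumption).
INDEX_FILES = {"index.php", "index.htm", "index.html", "index.asp"}


class IndexLinkFinder(HTMLParser):
    """Collect <a href> values whose last path segment is a default index file."""

    def __init__(self) -> None:
        super().__init__()
        self.offending: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Ignore any query string; look only at the final path segment.
                last_segment = urlsplit(value).path.rsplit("/", 1)[-1].lower()
                if last_segment in INDEX_FILES:
                    self.offending.append(value)


def find_index_links(html: str) -> list[str]:
    finder = IndexLinkFinder()
    finder.feed(html)
    finder.close()
    return finder.offending


if __name__ == "__main__":
    page = '<a href="/index.php">Home</a> <a href="/">Home</a>'
    print(find_index_links(page))
```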

Remove Session IDs

I can only give advice for PHP users; ASP and ColdFusion users will need to research how to remove session IDs on their own platforms. With PHP, if the visitor’s browser does not accept cookies, the session ID is automatically inserted into the URL as a way of maintaining state between pages. Most search engine crawlers don’t accept cookies, which means they get a different PHPSESSID in the URL every time they visit, and this leads to very ugly indexing. There is no ideal solution, so I compromise: when sessions are a requirement for the website, I would rather lose the small number of visitors who don’t have cookies than put up with PHPSESSID in my search engine listings (and potentially lose many more visitors). To disable PHPSESSID in the URL, insert the following lines into your .htaccess file (php_value only works when PHP runs as an Apache module; otherwise set the same two options in php.ini):

php_value session.use_only_cookies 1

php_value session.use_trans_sid 0

This means visitors with cookies turned off won’t be able to use any features of your site that rely on sessions, e.g. logging in or remembering form data.

Ensure all database-generated pages have unique URLs

This is somewhat more complicated, depending on how your site is set up. When I design pages, I always keep the “one page, one URL” rule in mind and design my page structure accordingly. If a product belongs to two categories, I ensure that both categories link to the same URL, or I modify the content significantly on both versions of the page so that they are not “identical” in the eyes of the search engine.
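One way to follow the “one page, one URL” rule is to build product links from the product alone, so the category a visitor came through never appears in the URL. The sketch below assumes a hypothetical `product.php?product=<id>` scheme; `product_url` and `category_links` are illustrative names, not part of any framework.

```python
from urllib.parse import urlencode

BASE = "http://www.iwebdev.it"  # replace with your own canonical host


def product_url(product_id: str) -> str:
    """Build the single canonical URL for a product; the category is deliberately left out."""
    return f"{BASE}/product.php?{urlencode({'product': product_id})}"


def category_links(products_by_category: dict[str, list[str]]) -> dict[str, list[str]]:
    """Map each category to the links it should show for its products."""
    return {
        category: [product_url(product_id) for product_id in products]
        for category, products in products_by_category.items()
    }


if __name__ == "__main__":
    links = category_links({"web": ["design"], "software": ["design", "hosting"]})
    print(links["web"][0] == links["software"][0])
```

Because both categories call the same function, a product listed in two places still resolves to one URL.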

301 Redirections

A 301 redirect is the correct way of telling search engines that a page has moved permanently. If you still want the non-www domain name to work, 301 redirect visitors to the www domain: the visitor will see the address change, and search engines will know to ignore the non-www version and use the www version instead. Use your .htaccess to 301 redirect visitors from index.htm to / and from any other pages that get renamed, e.g.:

Redirect 301 /index.htm http://www.iwebdev.it/
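Whatever tool sends it, a 301 is just a status line plus a `Location` header. The minimal Python WSGI sketch below shows that response for a small table of renamed pages; the `REDIRECTS` entries and `redirect_app` are hypothetical, and on Apache the redirect directive above does the same job without any code.

```python
from typing import Callable, Iterable

# Old path -> new absolute URL (example entries; replace with your own renamed pages).
REDIRECTS = {
    "/index.htm": "http://www.iwebdev.it/",
    "/index.php": "http://www.iwebdev.it/",
}


def redirect_app(environ: dict, start_response: Callable) -> Iterable[bytes]:
    """Answer known old paths with a permanent redirect and everything else with 404."""
    target = REDIRECTS.get(environ.get("PATH_INFO", ""))
    if target is not None:
        # 301 tells browsers and search engines the move is permanent.
        start_response("301 Moved Permanently", [("Location", target)])
        return [b""]
    start_response("404 Not Found", [("Content-Type", "text/plain")])
    return [b"Not found"]
```

To try it locally you could serve it with `wsgiref.simple_server.make_server("", 8000, redirect_app).serve_forever()` and request `/index.htm`.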

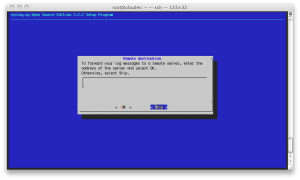

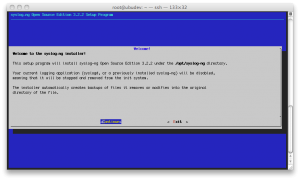

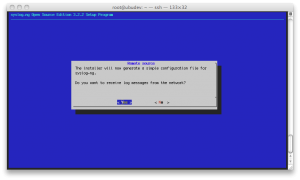

The last step asks the user whether to forward log messages to a remote server; we choose “skip”.
